Since its inception in the earliest days of the internet (October 27, 1994, to be exact), digital advertising has evolved to open up a whole new world of lucrative sales opportunities predicated on marketing to the consumers with the highest conversion potential.

Simultaneously, it’s opened up a Pandora’s box of ethical concerns, as many advertisers have resorted to invasive practices to data-mine their target audience. Even more disturbing, a USD 531 billion industry (as of 2022) is growing into a trillion-dollar one on top of data from consumers who are often unaware they are being targeted.

This article will explore how we can successfully navigate the ethics of digital advertising in a data-driven world. We’ll discuss the main ethical challenges, the role of informed consent and transparency, the ethical boundaries for targeting and profiling, how to protect user data, the role of AI and algorithms, and how to set ethical standards.

The Evolution of Digital Advertising

Digital advertising has been evolving into a data-driven industry ever since AT&T purchased the first banner ad in 1994. As early as 1995-1996, ad agencies began developing tools to show companies which websites their target customers typically visited and how often their ads were viewed or clicked.

Then, in 2006, Facebook entered the scene. The social media giant set the precedent of handing over users’ private information (e.g., demographics and content preferences) to advertisers. This enabled advertisers to target the users they identified as potential customers on a more personal, intimate level.

Impact of data-driven practices

Since these developments, digital advertising has relied increasingly on data-driven practices to expand its reach and increase conversions. The result, however, is that advertisers are getting more intrusive. And consumers have begun to take notice. For example, it’s not uncommon for an internet user to search for a product on Google and then see an ad for that product the next time they log into Facebook.

Users are being tracked no matter where they go on the internet. It seems they can’t search for anything, sign up for anything, or purchase anything without this information getting mined by advertisers to sell them more goods and services.

For this reason and many others, digital advertising raises enormous ethical challenges.

Main Ethical Challenges in Digital Advertising Today

Let’s talk about the main ethical challenges in digital advertising in 2023, starting with the state of the ethical landscape.

The state of the ethical landscape

Here’s how things stand. The ethics of digital advertising has become a matter for governments worldwide. It has led to laws such as the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA), and dozens of others in the US and across the globe. There is still no sweeping privacy legislation at the US federal level, although bills such as the American Data Privacy and Protection Act have been proposed in Congress.

Privacy concerns and user rights

The biggest challenges that digital advertising poses to ethics concern privacy and user rights. Because there is little government regulation of how websites and advertisers acquire data and what they do with it, users have gotten into the habit of giving away private information without knowing it. In many US states, companies are not obligated to tell you what they will do with your data once they have it. They could give it away or sell it to advertisers, which is one reason you’ll often see targeted ads after browsing the internet.

Laws like the GDPR are designed to force advertisers to be transparent about data collection and to make stronger efforts to obtain users’ consent to share their information. Some lawmakers are also working to classify biometric data and geolocation as “sensitive information,” which advertisers may access only with users’ explicit consent.

Exploitative practices vs. user consent

Unfortunately, asking for user consent is not the final answer. Companies can still exploit consumers, with or without consent.

For example, they can try to deceive you into clicking “I agree” to data collection by blocking certain information on a webpage until you do so. They can also use vague language such as, “We may share your information with third parties,” to convince you that giving them access to your information is not a big deal.

However, consent is still a critical part of solving the ethical dilemmas of digital advertising. The next section shows how that could be applied properly and without exploiting internet users.

The Role of Informed Consent and Transparency

Informed consent and transparency play a significant role in improving the ethics of digital advertising. For them to have the greatest impact, a few key factors need to be considered.

The importance of clear communication

Saying that clear communication is important in digital advertising may sound too simple, but less so when you consider that tangled webs of exploitation and privacy abuses are what got us here. In these times, the simplest solution is often the best.

With clear communication, users finally gain real autonomy in choosing whether to share their data. Laying out all the variables, including the pros and cons, lets users decide in their own best interests.

Challenges in obtaining genuine consent

However, obtaining this genuine consent can be challenging. Even if you’re completely transparent with the user, you may still get people consenting to their data being collected simply because they didn’t bother reading the terms and conditions on your website. Alternatively, they may refuse to consent because they distrust all attempts to gather information about them. This can happen even if you’ve made it clear that you have no intention of selling or sharing their information with third parties.

Solutions for enhancing transparency

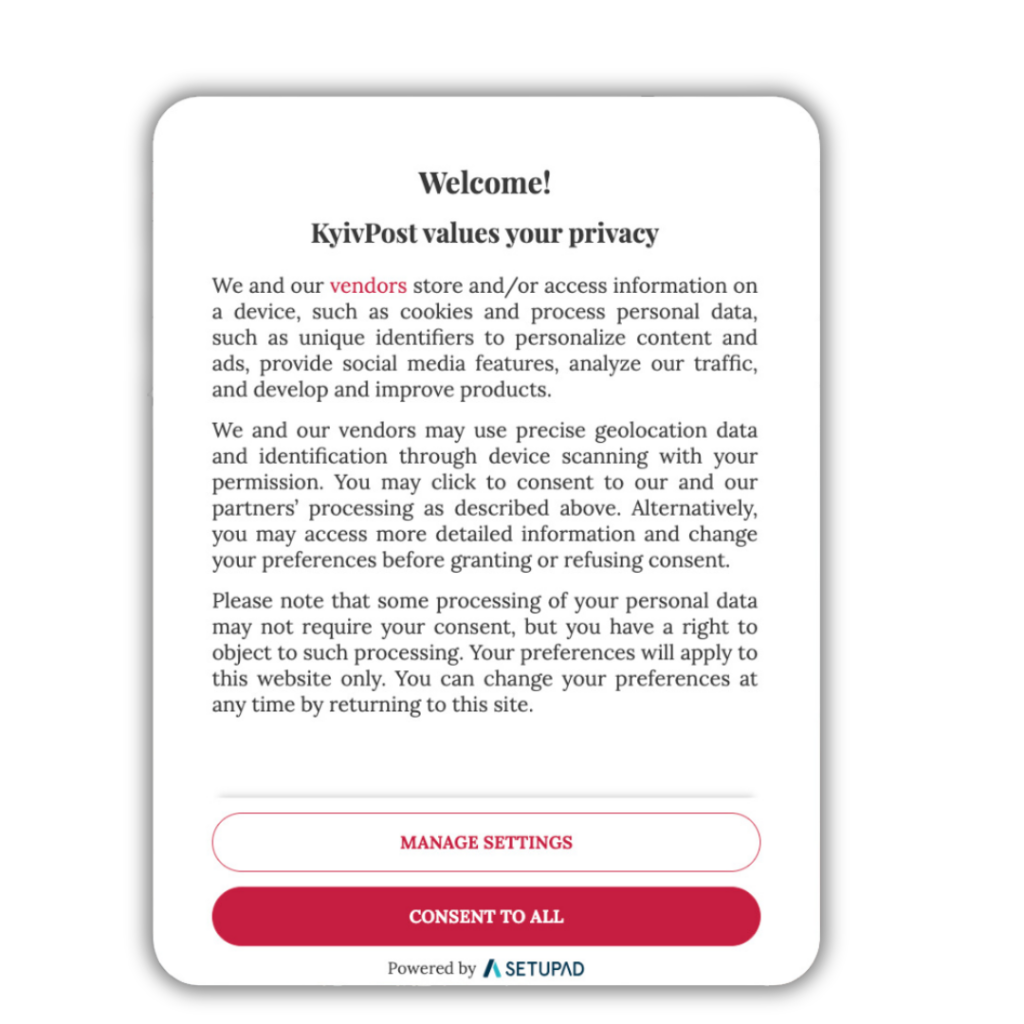

The answer to both situations is to enhance transparency. One tool for this is a consent management platform (CMP), which website owners use to collect and record users’ consent before gathering their data. A CMP helps a site handle personal information in line with requirements such as the GDPR and IAB Europe’s Transparency & Consent Framework (TCF).

Some best practices for your CMP include:

- Requiring users to scroll through terms and conditions before agreeing to give you access to their data.

- Using language that is as open, honest, and clear as possible so that it’s easy to comprehend.

- Giving users options regarding which types of data they will allow you to track.

- Not targeting users on an individual level but using other types of marketing to reach your audience at large.
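To make the third practice above (letting users choose which types of data you track) more concrete, here is a minimal Python sketch of per-purpose consent gating. It assumes a hypothetical set of purposes and simple in-memory storage; `Purpose`, `ConsentRecord`, and `track_event` are illustrative names, not part of any real CMP or of IAB Europe’s TCF. The key design choice is that every purpose defaults to off, so nothing is recorded until the user opts in.

```python
from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    # Hypothetical purposes a user can opt into one by one
    ANALYTICS = "analytics"
    PERSONALIZED_ADS = "personalized_ads"
    LOCATION = "location"


@dataclass
class ConsentRecord:
    """Which purposes a user has explicitly granted; empty means nothing is allowed."""
    user_id: str
    granted: set[Purpose] = field(default_factory=set)

    def grant(self, purpose: Purpose) -> None:
        self.granted.add(purpose)

    def revoke(self, purpose: Purpose) -> None:
        # Withdrawing consent should be as easy as giving it
        self.granted.discard(purpose)

    def allows(self, purpose: Purpose) -> bool:
        return purpose in self.granted


def track_event(consent: ConsentRecord, purpose: Purpose, event: dict, sink: list) -> bool:
    """Store the event only if the user opted into this specific purpose."""
    if not consent.allows(purpose):
        return False  # no consent for this purpose: the event is dropped, not stored
    sink.append({"user": consent.user_id, "purpose": purpose.value, **event})
    return True


if __name__ == "__main__":
    events: list = []
    consent = ConsentRecord("user-123")
    consent.grant(Purpose.ANALYTICS)
    print(track_event(consent, Purpose.ANALYTICS, {"page": "/pricing"}, events))         # True
    print(track_event(consent, Purpose.PERSONALIZED_ADS, {"page": "/pricing"}, events))  # False
    print(len(events))  # 1
```

In practice, the consent record would typically be populated from whatever your CMP stores rather than built by hand as in this sketch.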

The last of these brings us to another important topic: the ethical boundaries for targeting and profiling users. Let’s dive into it.

What Are the Ethical Boundaries for Targeting and Profiling?

The central theme of ethics in digital advertising has been data obtained through targeting and profiling individual users. Is there a way to perform these practices ethically so advertisers can still get the information they need to reach their target audience?

User profiling – the possible icebergs and how to do it right

User profiling has many hidden icebergs that digital advertisers and marketers can easily crash into if they’re not careful. For example, collecting information about consumers’ ages, ethnicities, geographical locations, and so on is generally considered invasive. You may find it increasingly hard to get consent for this, and collecting it without valid consent can lead to legal trouble.

But there is a way to do it right. One way is to obtain genuine consent through transparency. Another is to rely on zero-party and first-party data. The former is information that customers willingly give to companies about themselves; the latter is data you acquire from customer interactions with your website, app, and/or storefront.

The fine line where targeting becomes discriminatory

There is a fine line where targeting can become discriminatory. According to the Consumer Financial Protection Bureau in the United States, discriminatory targeting is “the act of directing predatory or otherwise harmful products or practices at certain groups, neighborhoods, or parts of a community.” The Bureau uses the example of a nursing school that targeted members of specific races to get them to take out expensive loans to enroll in its program.

This is another ethical boundary for targeting, and one that can also embroil companies in legal trouble. Care must be taken never to cross it.

But beyond obtaining (and using) data on internet users ethically, there’s something else companies should be equally concerned about: protecting that data once they have it.

Data Security and Breaches – How to Protect User Data

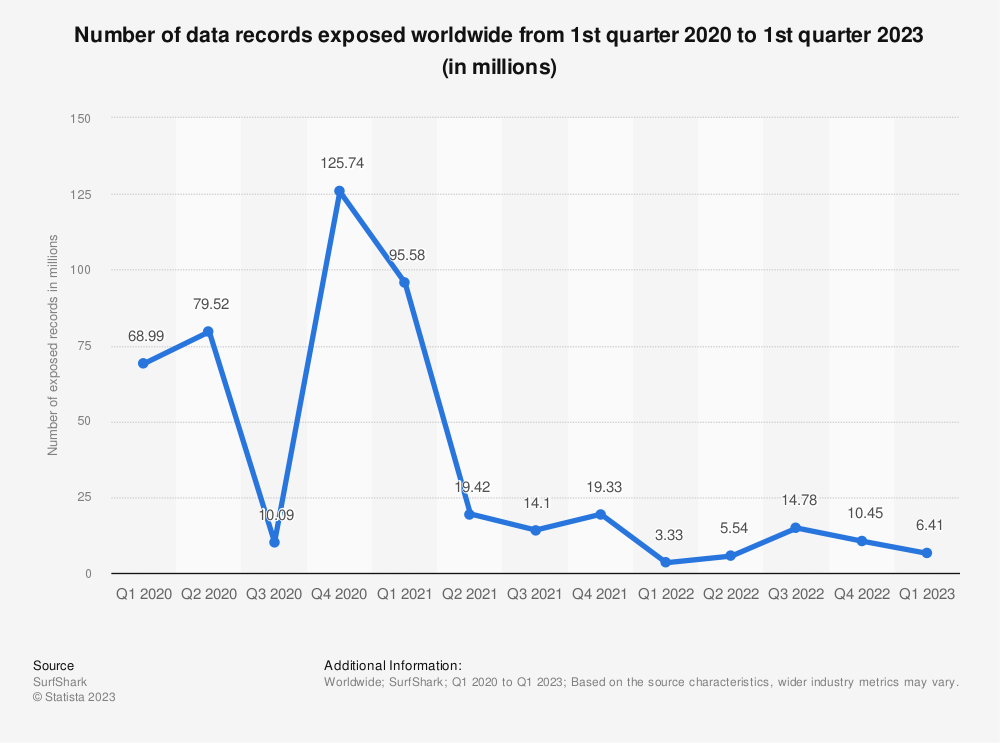

In Q1 2023 alone, data breaches exposed approximately 6.41 million data records worldwide. Such records can include individuals’ birth dates, Social Security numbers, credit card numbers, email addresses, and more.

Source: Statista

Users take a big risk when they share personal data with a company. No matter how transparent and ethical the company is with that data, it can always be leaked if the company’s security is compromised. And such a compromise is close to inevitable: according to IBM’s Cost of a Data Breach Report 2022, 83% of the organizations studied had experienced more than one data breach.

Protecting user data typically comes down to ramping up existing security measures and training everyone in proper data management and safety practices. Many data breaches occur due to internal negligence, so addressing those gaps first will allow for a stronger defense.

Another way to protect data is by putting less of it in the hands of fallible human beings and relying more on artificial intelligence (AI) automation technologies. But that road is paved with plenty of pros and cons, as we’ll see in the next section.

The Role of AI and Algorithms in the Digital Advertising Context

AI and machine learning algorithms have swiftly become the digital advertiser’s best tools. They can help optimize campaigns, reveal insights about target users faster, and generate entire advertisements from a few prompts, among other useful applications.

But is that a good thing with respect to ethics? Let’s find out.

AI-driven advertising – pros and cons

Here are the pros and cons of AI-driven advertising.

Pros

- Automates tedious tasks, thus reducing advertisers’ workloads

- Processes huge volumes of data in record time

- Learns and predicts consumer behavior

- Can rely on first-party and zero-party data

Cons

- Poses potential legal concerns

- May yield inaccurate information

- Opens advertisers to the risk of discriminatory targeting

- Can produce inhuman, robotic content that repels human users

Ethical challenges in algorithmic advertising

As you can see from our pros and cons list, algorithmic advertising poses a few glaring ethical challenges. The biggest is that it exposes advertisers to discriminatory targeting if they rely too heavily on their AI tools. Furthermore, as AI in general becomes the target of legislation, it may not be long before digital advertisers run into legal problems.

Balancing automation with ethics

But is automation, in general, a bad thing in advertising? Not necessarily. It must simply be balanced with ethics. To do that, it’s important to view AI and machine learning as tools for advertisers, not replacements. It’s also critical to remember that these are imperfect instruments, and their findings should always be verified and tested.

Setting the Ethical Standard

With all these considerations, it’s clear that we need to set ethical standards for digital advertising. Here’s what that might look like on two levels: industry bodies and regulators, and advertisers and ad agencies.

The role of industry bodies and regulators

Industry bodies and regulators should be at the vanguard of setting an ethical standard in digital advertising. We’re already beginning to see this in legislation like the GDPR, the CCPA, etc. That’s a great start, but there’s still a long way to go, particularly on the federal level in the United States.

Best practices for ethical digital advertising

Meanwhile, advertisers and ad agencies can implement ethical digital advertising immediately. Here are some of the best practices that can help these entities do better:

- Communicating your intentions with customers.

- Asking for explicit consent to collect and use customer data.

- Giving users options to accept or decline certain types of data collection or use cases.

- Using only first-party and zero-party data.

- Utilizing contextual advertising.

- Using AI and automation as tools, not as replacements for advertisers.

- Avoiding discriminatory targeting.

- Remaining in compliance with digital privacy regulations in your geographic location.
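Contextual advertising, mentioned in the list above, selects an ad based on the content of the page being viewed rather than on a profile of the person viewing it. Below is a minimal Python sketch that assumes a simple keyword-overlap rule and a hypothetical in-memory ad inventory; `Ad` and `pick_contextual_ad` are illustrative names, and production contextual systems generally use richer content classification. Note that the function never receives any user data.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Ad:
    name: str
    keywords: frozenset[str]


def page_terms(text: str) -> set[str]:
    """Lowercased word tokens from the page content: the only input used for targeting."""
    return set(re.findall(r"[a-z]+", text.lower()))


def pick_contextual_ad(page_text: str, inventory: list[Ad]) -> Optional[Ad]:
    """Return the ad whose keywords overlap most with the page, or None if none match."""
    terms = page_terms(page_text)
    best: Optional[Ad] = None
    best_score = 0
    for ad in inventory:
        score = len(ad.keywords & terms)  # number of keywords shared by ad and page
        if score > best_score:  # strict '>' keeps the earlier ad on ties
            best, best_score = ad, score
    return best


if __name__ == "__main__":
    inventory = [
        Ad("running-shoes", frozenset({"running", "marathon", "shoes"})),
        Ad("cookware", frozenset({"recipe", "pan", "kitchen"})),
    ]
    ad = pick_contextual_ad("Ten tips for your first marathon: pacing, shoes, and hydration", inventory)
    print(ad.name if ad else None)  # running-shoes
```

Because the only input is the page itself, this approach sidesteps the profiling and consent questions raised earlier, at the cost of less precise targeting.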

Conclusion

In summary, data-driven digital advertising poses several ethical dilemmas that can’t be handwaved away. As new legislation continues to emerge to protect users’ right to data privacy, ad agencies must adopt more privacy-friendly data collection and security practices.
